Monoliths can do Multicloud

Three years ago, at Happy Scribe, our transcoding needs had outgrown the cozy confines of Heroku, so we made the leap to serverless with AWS Fargate. The promise was one of infinite scalability and cost-effectiveness. In reality, though, we faced a trade-off that didn't quite sit right with us: with bandwidth and compute savings came complexity and a sluggish development cycle.

Just a few months ago, we took a hard look at our setup. It was eating up a huge chunk of our budget and had become the bottleneck of the whole transcription pipeline, slowing down half of our files. It made no sense: our state-of-the-art speech recognition was faster and cheaper than simply converting video formats. The transcoding code? It had been tweaked maybe five times in three years. Not for lack of need, but because the prospect of wading through the painful development process was a deterrent in itself. Clearly, something needed to change.

Today, we've taken a step that some may see as unorthodox: we've adopted what we like to call a Multicloud Monolith. This isn't a story of a grand revolution, but one of a deliberate, practical step towards streamlining our operations.

Our transcoding is now four times faster, video processing and streaming costs have plummeted to a twentieth of what they were, and we can iterate on the code locally in just a couple of seconds. All this while keeping the core of our app on good old Heroku.

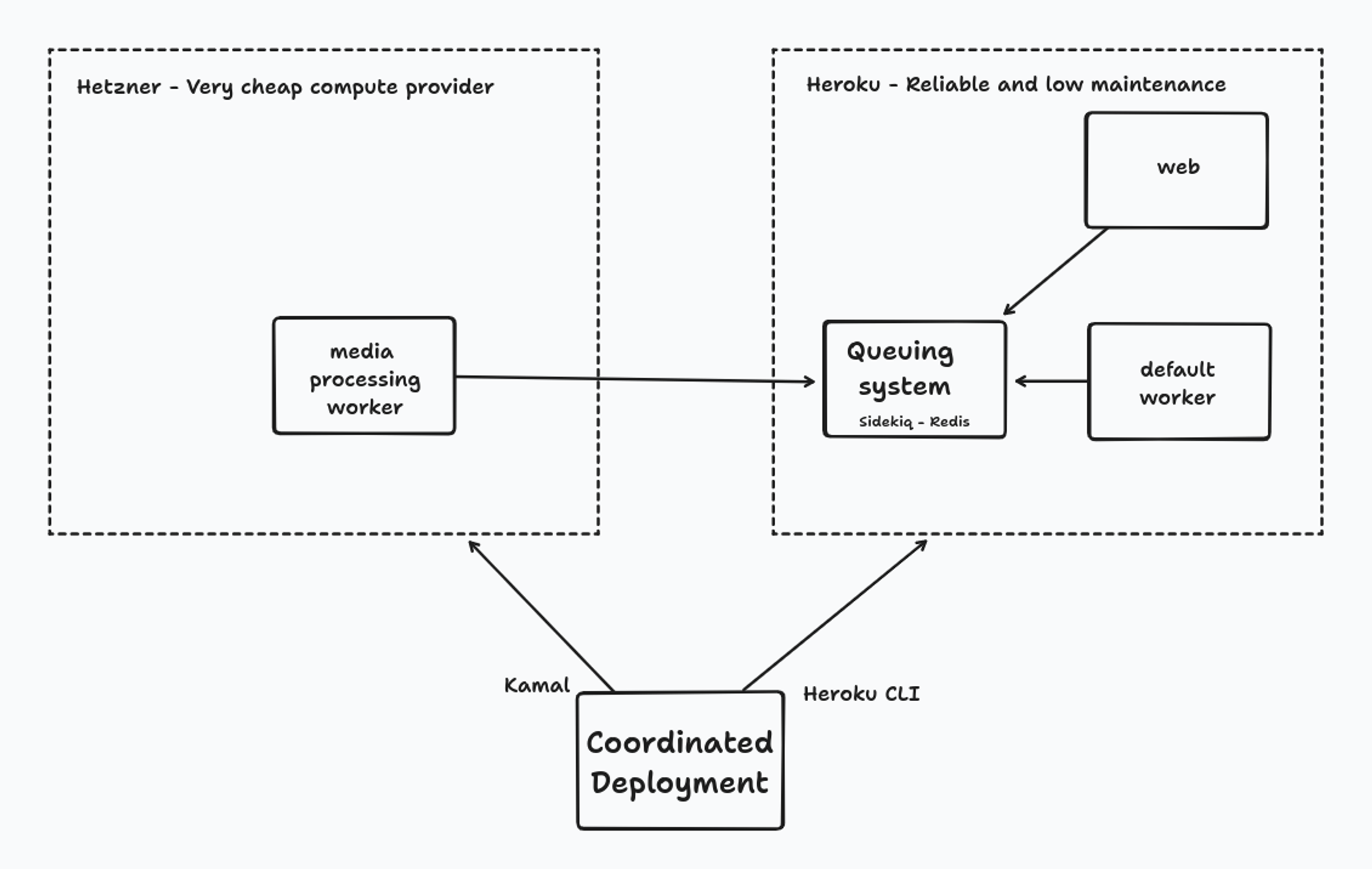

Let’s take a look at our new setup

We use Hetzner for the heavy lifting because it's cheap, doesn't charge for bandwidth, and gets the job done. The workers on Hetzner run a copy of our entire Rails monolith, along with all the configuration needed to connect to our main infrastructure on Heroku.

For everything else, Heroku is our home base. It’s where our web and main workers live, with Sidekiq and Redis to manage the background jobs.
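For the Hetzner workers to join the same Sidekiq setup, they have to reach the Redis instance on Heroku. Below is a minimal sketch of what that connection config might look like, assuming the workers receive the same `REDIS_URL` that Heroku provides to its dynos. The helper name `sidekiq_redis_options` is hypothetical; the hash it returns has the shape Sidekiq accepts in `config.redis =`. Heroku's managed Redis uses TLS (`rediss://` URLs) with self-signed certificates, which is why the sketch turns off certificate verification for those URLs.

```ruby
require "openssl"
require "uri"

# Hypothetical helper: builds the options hash passed to Sidekiq's
# `config.redis =` on a Hetzner worker. Assumes REDIS_URL holds the same
# connection string that Heroku exposes to its own dynos.
def sidekiq_redis_options(env = ENV)
  url = env.fetch("REDIS_URL")
  options = { url: url }

  # Heroku's Redis add-on serves TLS ("rediss://") with a self-signed
  # certificate, so verification is disabled; traffic is still encrypted.
  if URI.parse(url).scheme == "rediss"
    options[:ssl_params] = { verify_mode: OpenSSL::SSL::VERIFY_NONE }
  end

  options
end

# In config/initializers/sidekiq.rb (inside the Rails app, with Sidekiq loaded):
#   Sidekiq.configure_server { |config| config.redis = sidekiq_redis_options }
#   Sidekiq.configure_client { |config| config.redis = sidekiq_redis_options }
```

Turning off verification keeps the traffic encrypted but does not authenticate the server, a trade-off worth weighing when the connection crosses the public internet between two providers.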

To deploy the code, we simply run two processes concurrently: one uses the Heroku CLI to deploy to Heroku, and the other uses Kamal to deploy to Hetzner.
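The two-process deploy can be sketched as a small Ruby script that starts both deploys at once and waits for both to finish. The commands are assumptions: `kamal deploy` is Kamal's standard deploy command, while the post only says the Heroku side goes through the Heroku CLI, so `git push heroku main` (pushing to the git remote the Heroku CLI sets up) stands in for it here. The names `DEPLOY_COMMANDS` and `deploy_concurrently` are hypothetical.

```ruby
# Hypothetical deploy commands; the Heroku one is a stand-in assumption.
DEPLOY_COMMANDS = {
  heroku: ["git", "push", "heroku", "main"],
  hetzner: ["kamal", "deploy"]
}.freeze

# Starts every command before waiting on any of them, so the deploys run
# side by side. Returns { name => true/false } based on each exit status.
def deploy_concurrently(commands)
  pids = commands.transform_values do |argv|
    Process.spawn(*argv)
  rescue SystemCallError
    nil # binary missing or not executable: report failure, keep going
  end

  pids.transform_values do |pid|
    next false if pid.nil?

    _, status = Process.wait2(pid)
    status.success?
  end
end
```

Calling `deploy_concurrently(DEPLOY_COMMANDS)` from the app's root returns a hash such as `{ heroku: true, hetzner: true }`, and a missing binary shows up as `false` instead of aborting the other deploy. Nothing here keeps the two sides in lockstep: if one deploy fails, the platforms run different versions until it is retried.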

In terms of capacity, Hetzner is so cheap that we don’t need autoscaling. We are just very overprovisioned and that’s it.

With this setup, thanks to Rails and Sidekiq, running jobs in the Hetzner infrastructure is as simple as:

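```ruby
class TranscodingJob < ApplicationJob
  # Just a different queue: the Sidekiq processes running on Hetzner consume it
  queue_as :hetzner_video_processing

  def perform
    # CPU-intensive work here
  end
end

# Enqueue from anywhere in the monolith, e.g. a web dyno on Heroku
TranscodingJob.perform_later
```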
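Because the Heroku and Hetzner workers share one codebase, what decides where a job runs is simply which Sidekiq processes listen to its queue (set with Sidekiq's `-q` flag). The toy model below, in plain Ruby rather than Sidekiq itself, makes that explicit and adds a check worth running before a deploy: a queue that no process listens to would silently pile up jobs. The process names and queue lists are hypothetical.

```ruby
require "set"

# Hypothetical layout: each Sidekiq process only fetches from the queues it
# was started with, e.g. `sidekiq -q default -q mailers` on Heroku and
# `sidekiq -q hetzner_video_processing` on Hetzner.
PROCESSES = {
  "heroku-worker" => %w[default mailers],
  "hetzner-worker" => %w[hetzner_video_processing]
}.freeze

# Names of the processes that will pick up a job enqueued on `queue`.
def consumers_for(queue, processes = PROCESSES)
  processes.select { |_name, queues| queues.include?(queue) }.keys
end

# Queues used by some job that no process listens to; jobs sent there
# would wait in Redis indefinitely.
def orphan_queues(job_queues, processes = PROCESSES)
  served = processes.values.flatten.to_set
  job_queues.uniq.reject { |queue| served.include?(queue) }
end
```

With this layout, `consumers_for("hetzner_video_processing")` returns `["hetzner-worker"]`, and `orphan_queues(%w[default hetzner_video_processing low])` returns `["low"]`. Moving a job between clouds then amounts to changing its `queue_as` line.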

We've navigated a few challenges along the way: coordinating deployments across platforms, ensuring reliability with smart fallbacks, and isolating code execution to prevent issues. We're considering a deep dive into these solutions in a future post if there's interest. Drop me a line at yoel@happyscribe.co if that's something you'd like to read!
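The fallbacks aren't described in this post, so the sketch below shows only one generic pattern, not the one used at Happy Scribe: check how long the oldest job on the Hetzner queue has been waiting and, past a threshold, enqueue on a queue served by a different pool of workers. The fallback queue name, the 120-second threshold, and `pick_queue` are placeholders.

```ruby
# Generic fallback pattern (hypothetical): route away from the Hetzner queue
# when its oldest job has been waiting too long.
PRIMARY_QUEUE = "hetzner_video_processing"
FALLBACK_QUEUE = "video_processing_fallback" # hypothetical queue on another worker pool
MAX_LATENCY = 120                            # seconds; arbitrary placeholder

# primary_latency: seconds the oldest job on PRIMARY_QUEUE has been waiting.
def pick_queue(primary_latency, max_latency: MAX_LATENCY)
  primary_latency > max_latency ? FALLBACK_QUEUE : PRIMARY_QUEUE
end

# Inside the Rails app, with Sidekiq loaded, this could be wired up as:
#   latency = Sidekiq::Queue.new(PRIMARY_QUEUE).latency
#   TranscodingJob.set(queue: pick_queue(latency)).perform_later
```

Looking at the age of the oldest job catches both a slow Hetzner pool and one that is down entirely, since in either case jobs stop being picked up promptly.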

Progressive enhancement on the infrastructure side

In web application development, Hotwire stands out for its ability to incrementally enhance the interactivity of applications. It avoids the complexity of single-page applications, letting us add dynamic, interactive elements while keeping the development ease of traditional server-rendered pages. The best part is that pages that don't need interactivity can just stick to good old Rails and ERB.

We were inspired by this approach and applied it to our infrastructure strategy. Just as Hotwire allows selective enhancement of web pages, the Multicloud Monolith lets us enhance specific parts of our infrastructure as needed. We start with the simplicity and development speed of Heroku, optimizing for happiness and agility. As certain processes become more critical and demanding, we selectively move them to more powerful setups, like Hetzner, for greater efficiency and scalability.

In essence, our Multicloud Monolith is to microservices what Hotwire is to single-page applications. Both approaches avoid the pitfalls of diving into overly complex architectures from the start. Instead, they allow for strategic, incremental enhancements. This keeps us from burdening our system with unnecessary complexity while maintaining a fine balance between agility and robustness. It's a pragmatic approach that scales smartly, enhancing our system where it counts the most.

Alternatives We've Discarded (for Now)

A separate (micro)service: it's almost never just one

Transcoding videos to MP4, extracting audio for transcription, hard-coding subtitles… Everything that touched video ran on Fargate because of Heroku's bandwidth limitations. So if we went down the microservices rabbit hole, we would soon have 5–10 services. That sounds even worse than what we had on Fargate.

There's also storage. As part of this cloud migration, we moved our files to Cloudflare R2, since we couldn't afford the data transfer costs from AWS to Hetzner. However, a few of our most important customers still need their files to be streamed through AWS. They have strict firewalls, and we are not going to be the ones telling them to allowlist a lesser-known storage provider just for us. Thanks to Active Storage, our Rails monolith can easily route different customers' files through different data paths while keeping all business logic central and accessible. With microservices, this would likely mean a very convoluted storage configuration.
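A minimal sketch of that per-customer routing, assuming a hypothetical `requires_aws_storage` flag on the customer and two Active Storage services named `amazon` and `cloudflare_r2` in `config/storage.yml` (R2 can be declared through Active Storage's S3 service, since it exposes an S3-compatible API). The point is that the decision lives in one plain Ruby method the rest of the monolith can call; `storage_service_for` is an illustrative name, not Happy Scribe's code.

```ruby
# Hypothetical customer model; only the flag matters for routing.
Customer = Struct.new(:name, :requires_aws_storage, keyword_init: true)

# Returns the Active Storage service name (as declared in config/storage.yml)
# that should hold this customer's files.
def storage_service_for(customer)
  customer.requires_aws_storage ? "amazon" : "cloudflare_r2"
end

# Inside the Rails app, the result could be passed when creating a blob:
#   ActiveStorage::Blob.create_and_upload!(
#     io: file, filename: "video.mp4",
#     service_name: storage_service_for(customer)
#   )
```

Keeping the rule in the monolith means the web app, the Heroku workers, and the Hetzner workers all make the same decision from the same code.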

We're not completely against splitting services, though. AI is central to our mission, so we have a dedicated service, written in Python and deployed with Kubernetes on a different cloud provider, built by our AI team. A transcoding team, though? Unlikely. No transcoding team, no transcoding service.

Full Migration to Hetzner: Tempting, but not for us (yet?)

We can't help but tip our hats to Basecamp's recent cloud exodus using Kamal—it's the kind of move that gets the tech team at Happy Scribe a little starry-eyed. We were also extremely lucky they released Kamal just when we were about to start working on this project.

The idea of following in those footsteps and moving our entire operation to Hetzner is certainly tempting. But here's the thing: we're not Basecamp. They have around 30 programmers; we have 8. Our ops team is, well, non-existent. A background worker going down isn't a crisis, because its jobs are swiftly picked up by another worker. But web service downtime? No, thank you. For now, we're keeping the web where we know it's safe and solid: on Heroku. This leaves the eight of us free to focus on what we do best, delivering real value to our customers.

Parting Thoughts

Our multicloud model could be your stepping stone away from the cloud. It slashes costs without adding too much complexity and keeps your team focused on what they do best. If we were to plan a full migration, we would definitely start this way and then gradually move everything else (web, database, and so on). We hope our journey offers useful insights, whether you are planning a full or a partial migration.

Did you find this interesting? We’re hiring. Who knows, with a bigger team, that complete cloud migration might just be on the horizon.

Yoel Cabo

Over my three-year journey as a Senior Software Engineer at Happy Scribe, I've touched every corner of our technology landscape. I've brought our transcription pipeline to a 99.8% reliability rate, contributed to building and architecting a system that smoothly coordinates over 500 transcribers with a small operations team, and built our AI serving infrastructure to be fast and cost-effective. Beyond that, I'm in charge of the technology behind this blog, ensuring our digital presence is as robust and reliable as our services.